John Mueller interview at SearchLove

I was fortunate enough to be able to interview Google’s John Mueller at SearchLove and quiz him about domain authority metrics, sub-domains vs. sub-folders and how bad rank tracking really is.

I have previously written and spoken about how to interpret Google’s official statements, technical documentation, engineers’ writing, patent applications, acquisitions, and more (see: From the Horse’s Mouth as well as posts like “what do dolphins eat?”). When I got the chance to interview John Mueller from Google at our SearchLove London 2018 conference, I knew that there would be many things that he couldn’t divulge, but there were a couple of key areas in which I thought we had seen unnecessary confusion, and where I thought that I might be able to get John to shed some light.

Mueller is a Webmaster Trends Analyst at Google, and these days he is one of Google’s most visible spokespeople. He is a primary source of technical search information in particular, and is one of the few figures at Google who will answer questions about (some!) ranking factors, algorithm updates and crawling / indexing processes.

Contents

- New information and official confirmations

- Learning more about the structure of webmaster relations

- More interesting quotes from the discussion

- Algorithm changes don’t map easily to actions you can take

- Do Googlers understand the Machine Learning ranking factors?

- Why are result counts soooo wrong?

- More detail on the domain authority question

- Maybe put your nsfw and sfw content on different sub-domains

- John can “kinda see where [rank tracking] makes sense”

- We’ve come a long way

- Personal lessons from conducting an interview on stage

- Things John didn’t say

New information and official confirmations

In the post below, I have illustrated a number of the exchanges John and I had that I think revealed either new and interesting information, or confirmed things we had previously suspected, but had never seen confirmed before on the record.

I thought I’d start, though, by outlining what I think were the most substantial details:

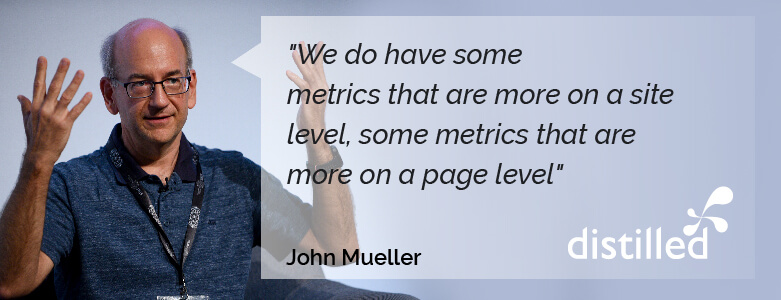

Confirmed: Google has domain-level authority metrics

We had previously seen numerous occasions where Google spokespeople had talked about how metrics like Moz’s Domain Authority (DA) were proprietary external metrics that Google did not use as ranking factors (this, in response to many blog posts and other articles that conflated Moz’s DA metric with the general concept of measuring some kind of authority for a domain). I felt that there was an opportunity to gain some clarity.

“We’ve seen a few times when people have asked Google: “Do you use domain authority?” And this is an easy question. You can simply say: “No, that’s a proprietary Moz metric. We don’t use Domain Authority.” But, do you have a concept that’s LIKE domain authority?”

We had a bit of a back-and-forth, and ended up with Mueller confirming the following (see the relevant parts of the transcript below):

- Google does have domain level metrics that “map into similar things”

- New content added to an existing domain will initially inherit certain metrics from that domain

- It is not a purely link-based metric but rather attempts to capture a general sense of trust

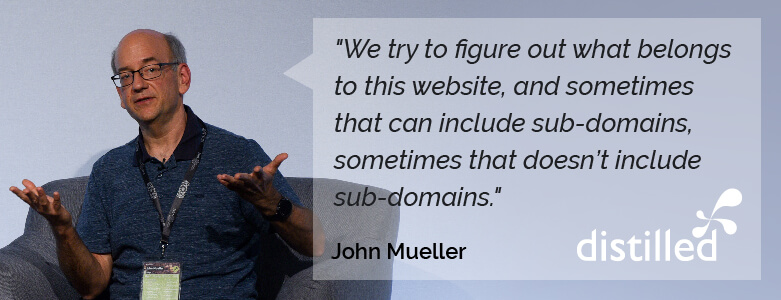

Confirmed: Google does sometimes treat sub-domains differently

I expect that practically everyone around the industry has seen at least some of the long-running back-and-forth between webmasters and Googlers on the question of sub-domains vs sub-folders (see for example this YouTube video from Google and this discussion of it). I really wanted to get to the bottom of this, because to me it represented a relatively clear-cut example of Google saying something that was different to what real-world experiments were showing.

I decided to approach it from this angle: I acknowledged that we can totally believe there isn’t an algorithmic “switch” at Google that classifies things as sub-domains and deliberately ranks them lower, but pointed out that we do regularly see real-world case studies showing uplifts from moving, and asked John to think about why we might see that happen. He said [emphasis mine]:

in general, we … kind of where we think about a notion of a site, we try to figure out what belongs to this website, and sometimes that can include sub-domains, sometimes that doesn’t include sub-domains.

Sometimes that includes sub-directories, sometimes that doesn’t include specific sub-directories. So, that’s probably where that is coming from where in that specific situation we say, “Well, for this site, it doesn’t include that sub-domain, because it looks like that sub-domain is actually something separate. So, if you fold those together then it might be a different model in the end, whereas for lots of other sites, we might say, “Well, there are lots of sub-domains here, so therefore all of these sub-domains are part of the main website and maybe we should treat them all as the same thing.”

And in that case, if you move things around within that site, essentially from a sub-domain to a sub-directory, you’re not gonna see a lot of changes. So, that’s probably where a lot of these differences are coming from. And in the long run, if you have a sub-domain that we see as a part of your website, then that’s kind of the same thing as a sub-directory.

To paraphrase that, the official line from Google is:

- Google has a concept of a “site” (see the discussion above about domain-level metrics)

- Sub-domains (or even sub-folders) can be viewed as not a part of the rest of the site under certain circumstances

- If we are looking at a sub-domain that Google views as not a part of the rest of the site, then webmasters may see an uplift in performance by moving the content to a sub-folder (that is viewed as part of the site)

Unfortunately, I couldn’t draw John out on the question of how to tell in advance whether a sub-domain is being treated as part of the main site. As a result, my advice remains the same as it used to be:

In general, create new content areas in sub-folders rather than sub-domains, and consider moving sub-domains into sub-folders with appropriate redirects etc.

The thing that’s changed is that I think that I can now say this is in line with Google’s official view, whereas it used to be at odds with their official line.
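If you do move a sub-domain into a sub-folder, the mechanical part is mapping every old sub-domain URL to its own new sub-folder URL so that each one can be permanently (301) redirected. Below is a minimal Python sketch of that mapping. It assumes a hypothetical layout where `blog.example.com/<path>` moves to `www.example.com/blog/<path>`; the hostnames, the `SUBDOMAIN_TO_FOLDER` table and the `redirect_target` function are illustrative only, not anything Google prescribes.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

# Hypothetical mapping: old sub-domain host -> (main host, sub-folder prefix).
SUBDOMAIN_TO_FOLDER = {
    "blog.example.com": ("www.example.com", "/blog"),
    "shop.example.com": ("www.example.com", "/shop"),
}


def redirect_target(url: str) -> Optional[str]:
    """Return the sub-folder URL an old sub-domain URL should 301 to, or None."""
    parts = urlsplit(url)
    mapping = SUBDOMAIN_TO_FOLDER.get(parts.hostname or "")
    if mapping is None:
        return None  # not a migrated sub-domain, so no redirect is needed
    host, prefix = mapping
    path = parts.path or "/"
    # Preserve the path and query string so each old URL maps to its own
    # equivalent, rather than sending everything to the sub-folder's home page.
    return urlunsplit((parts.scheme, host, prefix + path, parts.query, parts.fragment))
```

For example, `redirect_target("https://blog.example.com/post-1?ref=x")` gives `https://www.example.com/blog/post-1?ref=x`, which your web server would then return in the `Location` header of a 301 response. Mapping page-to-page, rather than redirecting everything to one URL, is the kind of “appropriate redirect” the advice above has in mind.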

Learning more about the structure of webmaster relations

Another area that I was personally curious about going into our conversation was about how John’s role fits into the broader Google teams, how he works with his colleagues, and what is happening behind the scenes when we learn new things directly from them. Although I don’t feel like we got major revelations out of this line of questioning, it was nonetheless interesting:

I assumed that after a year, it [would be] like okay, we have answered all of your questions. It’s like we’re done. But there are always new things that come up, and for a lot of that we go to the engineering teams to kind of discuss these issues. Sometimes we talk through with them with the press team as well if there are any sensitivities around there, how to frame it, what kind of things to talk about there.

For example, I was curious to know whether, when we ask a question to which John doesn’t already know the answer, he reviews the source code himself or turns to an engineer. It turns out that:

- He does not generally attend search quality meetings (timezones!) and does not review the source code directly

- He does turn to engineers from around the team to find specialists who can answer his questions, but does not have engineers dedicated to webmaster relations

For understandable reasons, there is a general reluctance among engineers to put their heads above the parapet and be publicly visible talking about how things work in their world. We did dive into one particularly confusing area that turned out to be illuminating – I made the point to John that we would love to get more direct access to engineers to answer these kinds of edge cases:

Concrete example: the case of noindex pages becoming nofollow

At the back end of last year, John surprised us with a statement that pages that are noindex will, in the long run, eventually be treated as nofollow as well.

Although it’s a minor detail in many ways, many of us felt that this exposed gaps in our mental model. I certainly felt that the existence of the “noindex, follow” directive meant that there was a way for pages to be excluded from the index, but have their links included in the link graph.

What I found more interesting than the revelation itself was what it exposed about the thought process within Google. What it boiled down to was that the folk who knew how this worked – the engineers who’d built it – had a curse of knowledge. They knew that there was no way a page that was dropped permanently from the index could continue to have its links in the link graph, but they’d never thought to tell John (or the outside world) because it had never occurred to them that those on the outside hadn’t realised it worked this way [emphasis mine]:

it’s been like this for a really long time, and it’s something where, I don’t know, in the last year or two we basically went to the team and was like, “This doesn’t really make sense. When people say noindex, we drop it out of our index eventually, and then if it’s dropped out of our index, there’s canonical, so the links are kind of gone. Have we been recommending something that doesn’t make any sense for a while?” And they’re like, “Yeah, of course.”
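The reasoning John relays can be shown with a toy link graph in Python. This is purely an illustration of the logic (a page that is no longer in the index cannot contribute links), not a description of how Google actually stores its link graph; the `link_graph` function and the example URLs are hypothetical.

```python
from typing import Dict, List, Set, Tuple


def link_graph(pages: Dict[str, List[str]], indexed: Set[str]) -> Set[Tuple[str, str]]:
    """Toy model: only pages still in the index contribute their outgoing links."""
    return {
        (src, dst)
        for src, outlinks in pages.items()
        if src in indexed
        for dst in outlinks
    }


pages = {
    "/tag/widgets": ["/product/blue", "/product/red"],  # a "noindex, follow" page
    "/home": ["/tag/widgets", "/product/blue"],
}

# Soon after the noindex is seen, the tag page may still be in the index...
before = link_graph(pages, indexed={"/home", "/tag/widgets"})
# ...but once it has been dropped for good, its links vanish from the graph too.
after = link_graph(pages, indexed={"/home"})
```

In this toy example, `/product/red` was only linked from the noindexed page, so once that page drops out it has no inbound links left, which is why “noindex, follow” eventually behaves like “noindex, nofollow”.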

More interesting quotes from the discussion

Our conversation covered quite a wide range of topics, and so I’ve included some of the other interesting snippets here:

Algorithm changes don’t map easily to actions you can take

Googlers don’t necessarily know what you need to do differently in order to perform better, and especially in the case of algorithm updates, their thinking about “search results are better now than they were before” doesn’t translate easily into “sites that have lost visibility in this update can do XYZ to improve from here”. My reading of this situation is that there is ongoing value in the work SEOs do to interpret algorithm changes, and the longer-running directional themes in Google’s changes, to guide webmasters’ roadmaps:

[We don’t necessarily think about it as] “the webmaster is doing this wrong and they should be doing it this way”, but more in the sense “well, overall things don’t look that awesome for this search result, so we have to make some changes.” And then kind of taking that, well, we improved these search results, and saying, “This is what you as a webmaster should be focusing on”, that’s really hard.

Do Googlers understand the Machine Learning ranking factors?

I’ve speculated that there is a long-run trend towards less explainability of search rankings, and that this will impact search engineers as well as those of us on the outside. We did at least get clarity from John that, at the moment, Google primarily uses ML to create ranking factors that feed into more traditional ranking algorithms, and that they can debug and separate the parts (as opposed to an ML-generated SERP, which would be much less inspectable):

[It’s] not the case that we have just one machine learning model where it’s like oh, there’s this Google bot that crawls everything and out comes a bunch of search results and nobody knows what happens in between. It’s like all of these small steps are taking place, and some of them use machine learning.

And yes, they do have secret internal debugging tools, obviously, which John described as “kind of like Search Console” (the “but better” was my interjection).

Why are result counts soooo wrong?

We had a bit of back-and-forth on result counts. I get that Google has told us that they aren’t meant to be exact, and are just approximations. Fair enough, but when a site: query claims 13m results and you click through to page 2 only to find that there are actually 11 (not 11m, just 11), you say to yourself that this isn’t a particularly helpful approximation. We didn’t really get any further on this than the official line we’ve heard before, but if you need that confirmed again:

We have various counters within our systems to try to figure out how many results we approximately have for some of these things, and when things like duplicate content show up, or we crawl a site and it has a ton of duplicate content, those counters might go up really high.

But actually, in indexing and later stage, I’m gonna say, “Well, actually most of these URLs are kinda the same as we already know, so we can drop them anyway.”

…

So, there’s a lot of filtering happening in the search results as well for [site: queries], where you’ll see you can see more. That helps a little bit, but it’s something where you don’t really have an exact count there. You can still, I think, use it as a rough kind of gauge. It’s like is there a lot, is it a big site? Does it end up running into lots of URLs that are essentially all eliminated in the end? And you can kinda see little bit there. But you don’t really have a way of getting the exact count of number of URLs.
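To make the mismatch John describes concrete, here is a toy Python model, emphatically not Google’s actual system: an early-stage counter counts every crawled URL, while a later stage collapses URLs whose content is identical. Duplicate detection by hashing the page body is an assumption chosen for simplicity, and the `counts` function and example URLs are hypothetical.

```python
import hashlib
from typing import Dict, Tuple


def fingerprint(body: str) -> str:
    # Toy duplicate detection: an exact-match hash of the page body.
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def counts(crawled: Dict[str, str]) -> Tuple[int, int]:
    """Return (raw_count, deduplicated_count) for a mapping of URL -> body."""
    raw_count = len(crawled)  # early-stage counter: every URL seen
    unique_bodies = {fingerprint(body) for body in crawled.values()}
    return raw_count, len(unique_bodies)


# One product page reachable via thousands of session-ID URLs, plus one other page.
crawled = {
    f"https://example.com/product?sessionid={i}": "<h1>Blue widget</h1>"
    for i in range(10_000)
}
crawled["https://example.com/about"] = "<h1>About us</h1>"
```

Here `counts(crawled)` returns `(10001, 2)`: a counter that ran before deduplication would suggest a large site, while almost all of those URLs are eliminated later. That is the gap between a site: estimate and the handful of results you can actually page through.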

More detail on the domain authority question

On the domain authority question that I mentioned above (not the Moz Domain Authority proprietary metric, but the general concept of a domain-level authority metric), here’s the rest of what John said:

I don’t know if I’d call it authority like that, but we do have some metrics that are more on a site level, some metrics that are more on a page level, and some of those site wide level metrics might kind of map into similar things.

…

the main one that I see regularly is you put a completely new page on a website. If it’s an unknown website or a website that we know tends to be lower quality, then we probably won’t pick it up as quickly, whereas if it’s a really well-known website where we’ll kind of be able to trust the content there, we might pick that up fairly quickly, and also rank that a little bit better.

…

it’s not so much that it’s based on links, per se, but kind of just this general idea that we know this website is generally pretty good, therefore if we find something unknown on this website, then we can kind of give that a little bit of value as well.

…

At least until we know a little bit better that this new piece of thing actually has these specific attributes that we can focus on more specifically.

Maybe put your nsfw and sfw content on different sub-domains

I talked above about the new clarity we got on the sub-domain vs. sub-folder question. John also explained some of the “is this all one site or not” thinking with reference to safe search. If you run a site with not safe for work / adult content that might be filtered out of safe search, alongside other content you want to appear in regular search results, you could consider splitting the two apart (presumably onto different sub-domains) so that Google can consider treating them as separate sites:

the clearer we can separate the different parts of a website and treat them in different ways, I think that really helps us. So, a really common situation is also anything around safe search, adult content type situation where you have maybe you start off with a website that has a mix of different kinds of content, and for us, from a safe search point of view, we might say, “Well, this whole website should be filtered by safe search.”

Whereas if you split that off, and you make a clearer section that this is actually the adult content, and this is kind of the general content, then that’s a lot easier for our algorithms to say, “Okay, we’ll focus on this part for safe search, and the rest is just a general web search.”

John can “kinda see where [rank tracking] makes sense”

I wanted to see if I could draw John into acknowledging why marketers and webmasters might want or need rank tracking – my argument being that it’s the only way of getting certain kinds of competitive insight (since you only get Search Console for your own domains) and also that it’s the only way of understanding the impact of algorithm updates on your own site and on your competitive landscape.

I struggled to get past the kind of line that says that Google doesn’t want you to do it, it’s against their terms, and some people do bad things to hide their activity from Google. I have a little section on this below, but John did say:

from a competitive analysis point of view, I kinda see where it makes sense

But the ToS thing causes him problems when it comes to recommending tools:

how can we make sure that the tools that we recommend don’t suddenly start breaking our terms of service? It’s like how can we promote any tool out there when we don’t know what they’re gonna do next.

We’ve come a long way

It was good to end with a shout-out to everyone working hard around the industry, as well as a nice little plug for our conference [emphasis mine, obviously]:

I think in general, I feel the SEO industry has come a really long way over the last, I don’t know, five, ten years, in that there’s more and more focus on actual technical issues, there’s a lot of understanding out there of how websites work, how search works, and I think that’s an awesome direction to go. So, kind of the voodoo magic that I mentioned before, that’s something that I think has dropped significantly over time.

And I think that’s partially to all of these conferences that are running, like here. Partially also just because there are lots of really awesome SEOs doing awesome stuff out there.

Personal lessons from conducting an interview on stage

Everything above is about things we learned or confirmed about search, or about how Google works. I also learned some things about what it’s like to conduct an interview, and in particular what it’s like to do so on stage in front of lots of people.

I mean, firstly, I learned that I enjoy it, so I do hope to do more of this kind of thing in the future. In particular, I found it a lot more fun than chairing a panel. In my personal experience, chairing a panel (which I’ve done more of in the past) requires a ton of mental energy on making sure that people are speaking for the right amount of time, that you’re moving them onto the next topic at the right moment, that everyone is getting to say their piece, that you’re getting actually interesting content etc. In a 1:1 interview, it’s simple: you want the subject talking as much as possible, and you can focus on one person’s words and whether they are interesting enough to your audience.

In my preparation, I thought hard about how to make sure my questions were short but open, and that they were self-contained enough to be comprehensible to John and the audience, and allow John to answer them well. I think I did a reasonable job but can definitely continue practicing to get my questions shorter. Looking at the transcript, I did too much of the talking. Having said that, my preparation was valuable. It was worth it to have understood John’s background and history first, to have gathered my thoughts, and to have given him enough information about my main lines of questioning to enable him to go looking for information he might not have had at his fingertips. I think I got that balance roughly right, enabling him to prep a reasonable amount while keeping a couple of specific questions for on the day.

I also need to get more agile and ask more follow-up and continuation questions. This is hard because you are having to think on your feet, and I think I did it reasonably well in areas where I’d deliberately prepped to do it. This was mainly in the more controversial areas, where I knew what John’s initial line might be but also knew what I ultimately wanted to get out of it or dive deeper into. I found it harder when I unexpectedly realised that I hadn’t quite got 100% of what I was looking for. It’s surprisingly hard to parse everything that’s just been said and figure out on the fly whether it’s interesting, new, and complete.

And that’s all from the comfort of the interrogator’s chair. It’s harder to be the questioned than the questioner, so thank you to John for agreeing to come, for his work in the prep, and for being a good sport as I poked and prodded at what he’s allowed to talk about.

I also got to see one of his 3D-printed Googlebot-in-a-skirt characters, a nice counterbalance to the gender assumptions that are too common in technical areas.

Things John didn’t say

There are a handful of areas where I wish I’d thought quicker on my feet or where I couldn’t get deeper than the PR line:

“Kind of like Search Console”

I don’t know if I’d have been able to get more out of him even if I’d pushed, but looking back at the conversation, I think I gave up too quickly, and gave John too much of an “out” when I was asking about their internal toolset. He said it was “kind of like Search Console” and I put words in his mouth by saying “but better”. I should have dug deeper and asked for some specific information they can see about our sites that we can’t see in Search Console.

John can “kinda see where [rank tracking] makes sense”

I promised above to get a bit deeper into our rank tracking discussion. I made the point that “there are situations where this is valuable to us, we feel. So, yes we get Search Console data for our own websites, but we don’t get it for competitors, and it’s different. It doesn’t give us the full breadth of what’s going on in a SERP, that you might get from some other tools.”

We get questions from clients like, “We feel like we’ve been impacted by update X, and if we weren’t rank tracking, it’s very hard for us to go back and debug that.” And so I asked John “What would your recommendation be to consultants or webmasters in those situations?”

I think that’s kinda tricky. I think if it’s your website, then obviously I would focus on Search Console data, because that’s really the data that’s actually used when we showed it to people who are searching. So, I think that’s one aspect where using external ranking tracking for your own website can lead to misleading answers. Where you’re seeing well, I’m seeing a big drop in my visibility across all of these keywords, and then you look in Search Console in it’s like, well nobody’s searching for these keywords, who cares if I’m ranking for them or not?

…

From our point of view, the really tricky part with all of these external tools is they scrape our search results, so it’s against our terms of service, and one thing that I notice kind of digging into that a little bit more is a lot of these tools do that in really sneaky ways.

(Yes, I did point out at this point that we’d happily consume an API).

They do things like they use proxies on mobile phones. It’s like you download an app, it’s a free app for your phone, and in the background it’s running Google queries, and sending the results back to them. So, all of these kind of sneaky things where in my point of view, it’s almost like borderline malware, where they’re trying to take users’ computers and run queries on them.

It feels like something that’s like, I really have trouble supporting that. So, that’s something, those two aspects, is something where we’re like, okay, from a competitive analysis point of view, I kinda see where it makes sense, but it’s like where this data is coming from is really questionable.

Ultimately, John acknowledged that “maybe there are ways that [Google] can give you more information on what we think is happening”, but I felt I could have done a better job of pushing for the need for this kind of data on competitive activity, and on the market as a whole (especially when there is a Google update). It’s perhaps unsurprising that I couldn’t dig deeper than the official line here, nor could I have expected to get a product announcement about a whole new kind of competitive insight data, but I remain a bit unsatisfied with Google’s perspective. I feel that tools that aggregate the shifts in the SERPs when Google changes its algorithm, and tools that let us understand the SERPs where our sites are appearing, are both valuable, and that Google is fixated on the ToS without acknowledging the ways this data is needed.

Are there really strong advocates for publishers inside Google?

John acknowledged being the voice of the webmaster in many conversations about search quality inside Google, but he also claimed that the engineering teams understand and care about publishers too:

the engineering teams, [are] not blindly focused on just Google users who are doing searches. They understand that there’s always this interaction with the community. People are making content, putting it online with the hope that Google sees it as relevant and sends people there. This kind of cycle needs to be in place and you can’t just say “we’re improving search results here and we don’t really care about the people who are creating the content”. That doesn’t work. That’s something that the engineering teams really care about.

I would have liked to push a little harder on the changing “deal” for webmasters, as I do think that some of the innovations that result in fewer clicks through to websites are fundamentally changing it. In the early days, there was an implicit deal that Google could copy and cache webmasters’ copyrighted content in return for driving traffic to them, and that this was a socially good deal. It even got tested in court [Wikipedia is the best link I’ve found for that].

When the copying extends so far as to remove the need for the searcher to click through, that deal is changed. John managed to answer this cleverly by talking about buying direct from the SERPs:

We try to think through from the searcher side what the ultimate goal is. If you’re an ecommerce site and someone could, for example, buy something directly from the search results, they’re buying from your site. You don’t need that click actually on your pages for them to actually convert. It’s something where when we think that products are relevant to show in the search results and maybe we have a way of making it more such that people can make an informed choice on which one they would click on, then I think that’s an overall win also for the whole ecosystem.

I should have pushed harder on the publisher examples – I’m reminded of this fantastic tweet from 2014. At least I know I still have plenty more to do.

Thank you to Mark Hakansson for the photos [close-up and crowd shot].

So. Thank you John for coming to SearchLove, and for being as open with us as you were, and thank you to everyone behind the scenes who made all this possible.

Finally: to you, the reader – what do you still want to hear from Google? What should I dig deeper into and try to get answers for you about next time? Drop me a line on Twitter.