How to estimate SEO traffic

Whether you’re expanding an existing business into a new market, bringing out a new product range, or building a brand new website from scratch, a key question you’ll need to answer is how much organic search traffic is reasonable to expect, and what the ceiling on that number might be. It’s also useful for an established site to know how close to the ceiling you are. In this post, I outline a process for coming to a reasonable estimate for that number.

How we do it

This is all an estimate. While it tries to be as rigorous as possible, there are a lot of unknowns that could cause the estimate to be off in either direction. The intention is to get a ballpark figure in order to set expectations and give an indication of the level of investment that should be devoted to a site.

In order to work out how much organic traffic we can get, we need four things:

1. A list of keywords we can reasonably expect to rank for

Gathering this list requires some keyword research. To get the most accurate estimates, we want to aim for as wide a set of keywords as possible, as long as they are actually relevant to the site we’re proposing.

This methodology focuses on the core non-branded keywords a site could rank for, e.g. the names of products and services offered by your website. Branded keywords should be considered separately, as their search volume is much more prone to change when a new brand is being launched.

Tangential or unrelated keywords (that might be the subject of a blog or resources page) are generally better considered separately, as there’s often an unbounded number of these. When deciding on content to create, I’d recommend running this process at a smaller scale over those particular keywords to estimate the organic traffic available for a piece of content.

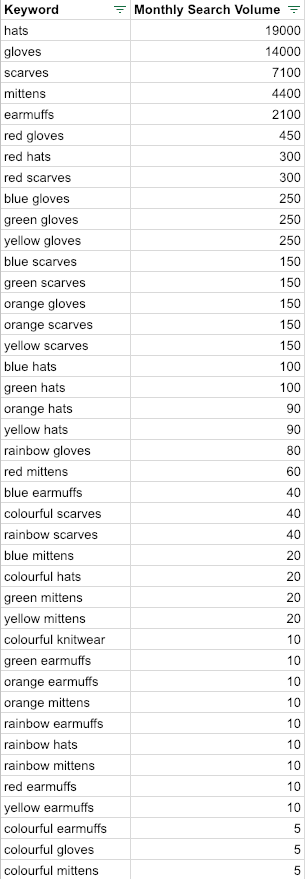

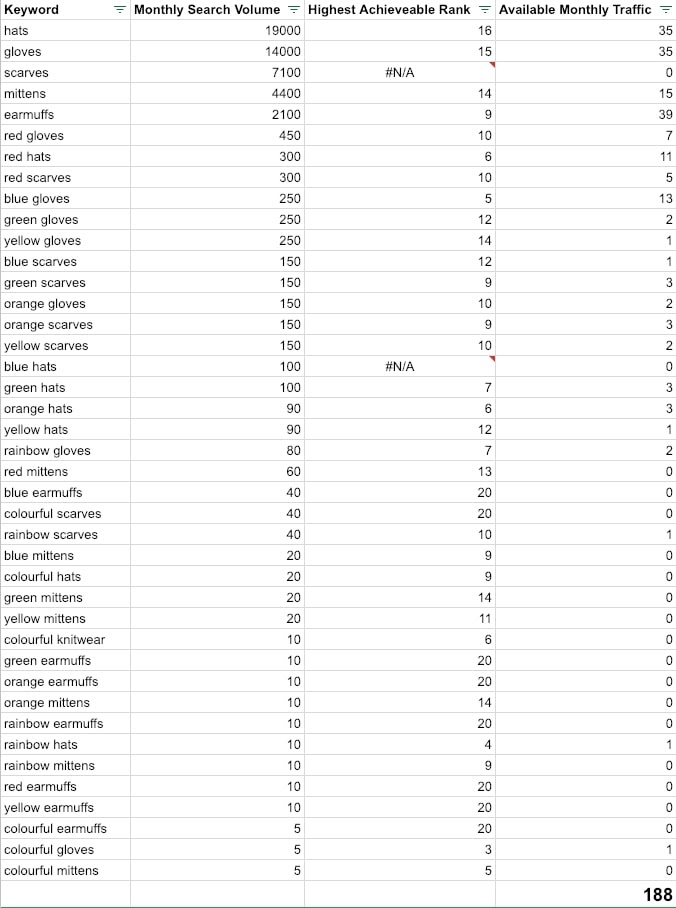

Below is a scaled-down example list of keywords that we could put together for a site selling colourful knitwear. In reality, your keyword list should probably run to hundreds or thousands of keywords.

2. Search volume for each keyword in our list

There are many SEO tools available to get search volume data from. We like to use Ahrefs, but any SEO tool will have this data available. Google Keyword Planner also gives keyword volume for free, although that data tends to be grouped into ranges. For the purposes of this exercise, you can take the midpoint of those ranges.

No keyword volume source, including Google, will be completely accurate, and they will generally give a monthly average that doesn’t take seasonal fluctuations into account. You should also be aware that keyword volumes for close variants are often grouped together, so there’s a risk of double counting if you don’t take this into account.
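Taking the midpoint of a ranged volume is simple arithmetic, but here is a minimal sketch of it in Python for anyone working outside a spreadsheet. The keywords and ranges are hypothetical placeholders, not real search data.

```python
# Hypothetical (low, high) monthly search ranges; illustrative values, not real data.
volume_ranges = {
    "red wool jumper": (100, 1_000),
    "yellow cardigan": (1_000, 10_000),
}


def range_midpoint(low: float, high: float) -> float:
    """Return the midpoint of a search-volume range."""
    return (low + high) / 2


# Replace each range with a single working estimate.
estimated_volume = {
    keyword: range_midpoint(low, high)
    for keyword, (low, high) in volume_ranges.items()
}

for keyword, volume in estimated_volume.items():
    print(f"{keyword}: ~{volume:.0f} searches/month")
```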

3. For each keyword, the highest ranking we can reasonably expect to achieve

Once we have our list of keywords with search volume, we should look at what’s currently ranking in the search results, in order to estimate where our site could expect to rank.

Note that what I’m about to outline is not a rigorous process. As mentioned above, the idea is to get a ballpark figure, and this method relies on some assumptions that are not to be held as claims about how SEO works. Specifically, we’re assuming that:

- We can make pages of high enough quality, and well-targeted enough, to rank well for a given keyword

- With this being the case, we could aspire to rank as high as anyone currently ranking in the SERP who has a weaker domain (in terms of backlink profile) than our site.

Of course, SEO is realistically much more complicated than that, but for the purposes of developing an estimate, we can hold our nose and go ahead with these assumptions.

This process is replicating some of the inputs that a lot of keyword tools use to calculate their difficulty scores. The reason we’re doing this is that we need to use a specific ranking position in order to project the amount of traffic we can get, which difficulty scores don’t give us.

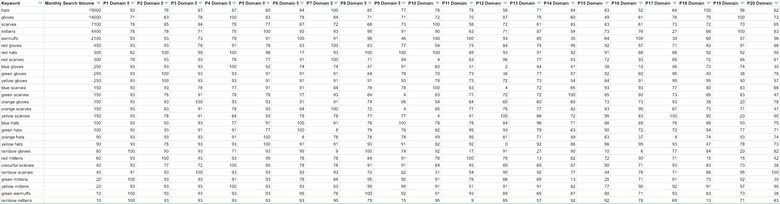

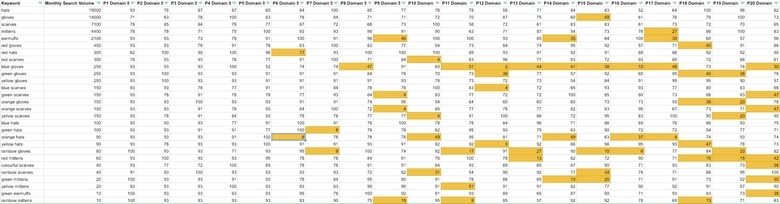

In order to work out where we can rank, we need to see who’s currently ranking for each keyword. Many rank tracking tools (including STAT, which we use at Brainlabs) will give you this data, as will search analytics tools such as Ahrefs and SEMrush. Let’s take the top 20 ranking URLs for each keyword. Don’t forget to get the rankings for the country/market you’re doing this analysis on!

We then want to cross-check these URLs against backlink data. Select your favourite backlink tool (Moz/Ahrefs/Majestic), and look up the domain-level strength of each domain that ranks for your keywords. If you’re doing this at a large scale, I’d recommend using the API of your tool, or URL Profiler. For this example, I’ve used Ahrefs domain rating (with 100 for any ranking positions that don’t have an organic result).

We are making the assumption that we can rank as well as any currently ranking site with a lower domain score than our site. If you’re running this analysis for a new site that doesn’t exist (and therefore doesn’t have a backlink profile), you can use a hypothetical target backlink score based on what you believe to be achievable.

For each keyword, find the highest ranking position which is currently occupied by a weaker domain than yours. If no such page exists in the top 20 results, we can discount that keyword from our analysis, and assume that we can’t rank for it.
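As a rough sketch of this step, the lookup can be written as a short Python function. The function name, keywords, and domain ratings below are all hypothetical; the only things taken from the process above are the rule (first position held by a weaker domain) and the convention of using 100 for positions without an organic result.

```python
from typing import Optional, Sequence


def best_achievable_rank(
    serp_ratings: Sequence[float], our_rating: float
) -> Optional[int]:
    """Return the 1-based position of the highest-ranking result whose
    domain rating is lower than ours, or None if there is no such result."""
    for position, rating in enumerate(serp_ratings, start=1):
        if rating < our_rating:
            return position
    return None


# Hypothetical domain ratings of the current results, position 1 first.
# A rating of 100 stands in for a position with no organic result.
serps = {
    "red wool jumper": [85, 72, 100, 41, 38],
    "yellow cardigan": [90, 88, 93, 79, 81],
}
our_rating = 45  # assumed rating for our own (or hypothetical) domain

achievable = {
    keyword: best_achievable_rank(ratings, our_rating)
    for keyword, ratings in serps.items()
}

for keyword, rank in achievable.items():
    print(keyword, "->", rank if rank is not None else "discounted")
```

In a real run each list would hold the top 20 results rather than five, and keywords that come back as None would be dropped from the model.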

4. For each ranking position, the amount of traffic we could expect to get each month

Now that we have our highest achievable rank, and monthly search volume, we can combine them to get the maximum monthly traffic we can expect from each keyword. To do this, we use a clickthrough rate model to estimate what percentage of searches lead to a click on a search result in each ranking position.

There are some very good public resources – I like Advanced Web Ranking’s data. While generic data like this will never be perfect, it will get us a good-enough estimate that we can use for this task.

If we multiply the monthly search volume by the click-through rate estimate for the highest achievable ranking position, that gives us the traffic expectation for each keyword. Add these together, and we get the total traffic we can expect for the site. We’re done!
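The calculation above can be sketched in a few lines of Python. The click-through rates here are made-up placeholders chosen only to show the arithmetic; they are not taken from Advanced Web Ranking or any other source, and the keywords and volumes are equally hypothetical.

```python
from typing import Dict, Optional

# Placeholder CTR per ranking position (made-up numbers, NOT real CTR data).
ctr_by_position: Dict[int, float] = {1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05}

# Hypothetical keywords with monthly volume and highest achievable rank.
# best_rank is None where no weaker domain ranks, so the keyword is discounted.
keywords = [
    {"keyword": "red wool jumper", "volume": 1000, "best_rank": 4},
    {"keyword": "yellow cardigan", "volume": 500, "best_rank": None},
    {"keyword": "striped scarf", "volume": 2000, "best_rank": 2},
]


def expected_traffic(
    volume: float, best_rank: Optional[int], ctr: Dict[int, float]
) -> float:
    """Monthly search volume multiplied by the CTR at the achievable rank."""
    if best_rank is None:
        return 0.0
    # Positions missing from the CTR table are treated as zero traffic.
    return volume * ctr.get(best_rank, 0.0)


total = sum(
    expected_traffic(k["volume"], k["best_rank"], ctr_by_position)
    for k in keywords
)
print(f"Estimated monthly organic traffic: ~{total:.0f}")
```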

What we can do with this information

Now that we have a number for the traffic we can expect, what can we do with it? Let’s revisit our assumptions. We’ve gone into this analysis assuming a static list of keywords, and a fixed backlink profile. What if we change that up?

What if our backlink profile improves?

Because we’ve set this up as a model in a spreadsheet, it’s relatively simple to tweak the domain score rating. By doing this, we can see how performance would improve by, for example, adding 10 points to our domain rating. If the traffic estimate is sensitive to small changes in this score, that would be a good indication that it’s worth investing in growing your backlink profile.
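The same what-if analysis can be sketched outside a spreadsheet. The Python below recomputes the total traffic estimate for a few assumed domain ratings; every number in it (ratings, volumes, CTRs) is a hypothetical placeholder rather than real data.

```python
from typing import Optional, Sequence

# Placeholder CTR per position (made-up numbers, not real CTR data).
CTR_BY_POSITION = {1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05}

# Hypothetical rows: (keyword, monthly volume, domain ratings from position 1 down).
KEYWORDS = [
    ("red wool jumper", 1000, [85, 72, 100, 41, 38]),
    ("yellow cardigan", 500, [90, 52, 93, 79, 81]),
]


def best_achievable_rank(
    serp_ratings: Sequence[float], our_rating: float
) -> Optional[int]:
    """First (1-based) position held by a domain weaker than ours, else None."""
    for position, rating in enumerate(serp_ratings, start=1):
        if rating < our_rating:
            return position
    return None


def total_traffic(our_rating: float) -> float:
    """Sum volume x CTR over every keyword we could rank for at this rating."""
    total = 0.0
    for _keyword, volume, ratings in KEYWORDS:
        rank = best_achievable_rank(ratings, our_rating)
        if rank is not None:
            total += volume * CTR_BY_POSITION.get(rank, 0.0)
    return total


# Compare the estimate at a few assumed domain ratings.
for rating in (35, 45, 55):
    print(f"domain rating {rating}: ~{total_traffic(rating):.0f} visits/month")
```

A large jump between adjacent ratings suggests that link building would unlock a meaningful number of additional keywords.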

What if we target a broader range of keywords?

The other way we could get more traffic would be by targeting more keywords. This could be via blog or resources style content, or by adding more transactional pages targeting more terms. To update the model, essentially repeat the above process with a new list of keywords, and add the results to the initial model. This can be a great way to identify new keyword opportunities.

Improving the model

As mentioned above, this was (intentionally) a very simple model. We’ve glossed over a lot of nuance in order to come to a ballpark figure of achievable search traffic. For a more rigorous estimate of the available search traffic, there are a few modifications I would make:

Use a tailored CTR model

The AWR data mentioned above provides a good breakdown of clickthrough rate by industry and device, as well as by different types of search intent. If you have the time, you should go through your keywords and pick out the appropriate CTR curve per keyword.

Break it up by device type

Some keywords can have dramatically different search results by device type. Tracking keywords separately on mobile and desktop, as is possible using some rank tracking tools including STAT, would allow you to separately estimate the highest achievable rank on each device. Unfortunately, I’m not aware of any source of search volume that is split by device type. Instead, you can split the search volume in half between mobile and desktop, or in a different proportion if you know that the particular space has a bias towards one or the other.
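Here is a minimal sketch of how the per-device version of the calculation might look. The function name, the 50/50 default split, the ranks, and the CTR values are all assumptions made for illustration; none of them are real data.

```python
from typing import Dict, Optional


def device_split_traffic(
    volume: float,
    ranks: Dict[str, Optional[int]],
    ctr_curves: Dict[str, Dict[int, float]],
    mobile_share: float = 0.5,
) -> float:
    """Estimate traffic for one keyword, with rank and CTR taken per device.

    mobile_share is the assumed fraction of searches made on mobile;
    the remainder is attributed to desktop.
    """
    shares = {"mobile": mobile_share, "desktop": 1.0 - mobile_share}
    total = 0.0
    for device, share in shares.items():
        rank = ranks.get(device)
        if rank is None:
            continue  # no achievable rank on this device
        total += volume * share * ctr_curves[device].get(rank, 0.0)
    return total


# Placeholder CTR curves per device (made-up numbers, not real CTR data).
ctr_curves = {
    "mobile": {1: 0.28, 2: 0.14, 3: 0.09},
    "desktop": {1: 0.32, 2: 0.16, 3: 0.11, 4: 0.08, 5: 0.06},
}

# Hypothetical keyword: 1,000 searches/month, rank 3 on mobile, rank 5 on desktop.
estimate = device_split_traffic(
    volume=1000,
    ranks={"mobile": 3, "desktop": 5},
    ctr_curves=ctr_curves,
)
print(f"~{estimate:.0f} visits/month")
```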

Look at the SERPs

The above methodology focuses on backlinks as the key decider of whether a page can rank or not. This is not how search works – there are hundreds of factors that go into deciding who ranks where for which keywords. Looking more closely at who is currently ranking will give great insight, as it might show opportunities that wouldn’t otherwise be apparent by looking at links alone.

For example, if a weaker domain ranks in the top three positions for a keyword that it is an exact match for, that would indicate that those results aren’t a good opportunity. Conversely, if the pages ranking for a given keyword aren’t a very good match for the intent of the search, and you can create a page that matches the intent better, that represents a better opportunity than link numbers would suggest.

Also, if none of the pages ranking for a search term are a close match to your site (e.g. if all of the results are informational for a search that you would target with a transactional page), it’s probably not a good opportunity and you should remove it from your list.

Conclusion

Thanks for reading through this post! I’d love to hear if anyone has any feedback on the process or ways it could be improved!